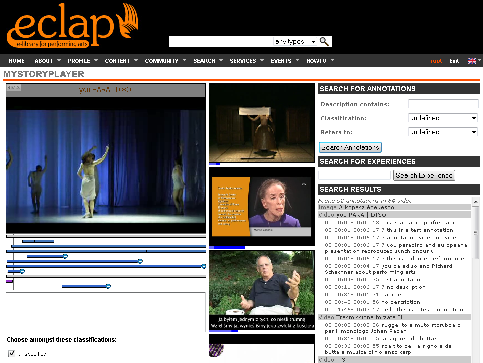

The MyStoryPlayer model and tools allow users to play personal experiences as non-linear stories by following temporal and logical relationships formalized via semantic annotations. In MyStoryPlayer, any media segment can be an annotation of another media element, so a single media item may be used as the basis for an unlimited number of media annotations. The solution has been studied and developed as a generalization of the models describing non-linear stories and navigation experiences, such as those one appreciates when navigating serials like Lost, FlashForward, Odyssey 5, Doctor Who, etc. The user may navigate through the audiovisual annotations, thus creating his own non-linear experience. The resulting solution includes a uniform semantic model, a semantic database, a distribution server for semantic knowledge and media, and the MyStoryPlayer client for use in web applications. MyStoryPlayer is presently used in ECLAP, http://www.eclap.eu.
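The uniform model, in which any media segment can annotate another, can be pictured as a graph of links between time intervals. The following is a minimal in-memory sketch, not the MyStoryPlayer implementation (which keeps the model as an RDF/OWL ontology on the server); the names `Segment`, `MediaGraph`, `annotate`, and `annotations_of` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Segment:
    """A time interval [start, end) in seconds inside one media item."""
    media_id: str
    start: float
    end: float


@dataclass
class MediaGraph:
    """Annotations link a segment of one media item to a segment of another.

    Because an annotation is itself just a media segment, it can in turn be
    annotated, and any media item can receive any number of annotations.
    """
    links: list[tuple[Segment, Segment]] = field(default_factory=list)

    def annotate(self, target: Segment, annotation: Segment) -> None:
        # Record that `annotation` is attached to the `target` interval.
        self.links.append((target, annotation))

    def annotations_of(self, media_id: str) -> list[Segment]:
        # All segments that annotate some part of `media_id`.
        return [ann for tgt, ann in self.links if tgt.media_id == media_id]
```

For example, a flashback segment can annotate an episode, and an interview segment can then annotate the flashback, using exactly the same `annotate` call: there is no separate "annotation" type.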

The solution has also been tested on a number of scenarios related to the modeling of non-linear storytelling. An example is also accessible on the web for the reviewers of this paper (http://www.mystoryplayer.org). The solution may be adopted for modeling educational materials, such as those created for medical and theatrical environments. In both cases, scenes are typically recorded from more than one point of view, and additional explanatory videos often have to be added, either to show historical aspects (at different times and places) or to relate the scenes to the underlying techniques, interpretations, or comparisons.

Download the MyStoryPlayer Flyer.

See:

- Video on Youtube http://www.youtube.com/watch?v=QHRFp9XN-Ok

- MyStoryPlayer page on ECLAP

- User Manual, which includes several examples

- How to create automatic synchronizations and sequences

- Slides presented at the First ECLAP Workshop

- Slides with audio and video, presented at the first ECLAP Workshop

- Document describing MyStoryPlayer, Collections, Playlists: Content aggregator tools in ECLAP Project

- http://www.mystoryplayer.org This portal exists ONLY to demonstrate research results. For this reason, the videos and other content are provided in strongly degraded formats; the copyright of the content belongs to FOX, and the content was found on the Internet. We would like to thank them. If the material has to be removed, please let us know by sending an email to the coordinator as reported below. We apologize again for any problem we may have caused.

Example for ECLAP with Dario Fo: http://www.eclap.eu/3748

User manual:

MyStoryPlayer is a tool that allows the user to build his non-linear narrative experience through annotations on multimedia objects such as video, images, and audio. The innovative aspect of MyStoryPlayer is that there is no difference between media and user annotations: both are multimedia objects, and they are temporally connected. Moreover, since the annotations are themselves audiovisual, the user is offered both multiple simultaneous views and multiple choices about what to watch, which gives him a sense of freedom in building his personal narrative experience. Every time the user chooses a video to play, rectangles appear just below the timeline to show the length of each annotation relative to the duration of the main video.
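The rectangles under the timeline scale each annotation interval to the duration of the main video. A minimal sketch of that mapping, assuming annotation intervals are given in seconds on the main video's timeline and the timeline has a fixed width in pixels; `timeline_rects` and its parameters are hypothetical.

```python
def timeline_rects(annotations, main_duration, timeline_px):
    """Map (start, end) intervals in seconds to (left_px, width_px) rectangles.

    Intervals are clipped to the main video's duration; intervals that fall
    entirely outside it produce no rectangle.
    """
    if main_duration <= 0:
        raise ValueError("main_duration must be positive")
    scale = timeline_px / main_duration  # pixels per second
    rects = []
    for start, end in annotations:
        start = max(0.0, start)
        end = min(main_duration, end)
        if end <= start:
            continue  # nothing to draw for this annotation
        rects.append((round(start * scale), round((end - start) * scale)))
    return rects
```

On a 600-pixel timeline for a 120-second video, an annotation covering seconds 60 to 120 becomes a rectangle starting at pixel 300 and 300 pixels wide.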

While the main video is playing, one or more other media (video, audio, or images) start playing beside the main video area. These media are connected with the main video for the time represented by the length of the annotation; afterwards they disappear. While they are active, the user can keep watching the main video or click on one of the side annotations. The latter action swaps the two videos, and new content associated with the new main media is loaded.
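The show/hide and swap behaviour can be sketched as follows. The `Annotation` record, the function names, and the rule for where the new main media resumes (at the same elapsed time inside the annotation) are assumptions made for illustration; the text above does not specify them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    target_id: str            # main media being annotated
    start: float              # annotation interval on the main media, in seconds
    end: float
    media_id: str             # side media shown during [start, end)
    media_start: float = 0.0  # offset in the side media where it starts playing


def active_annotations(annotations, main_id, t):
    """Side media shown while `main_id` is playing at time t."""
    return [a for a in annotations
            if a.target_id == main_id and a.start <= t < a.end]


def swap(annotations, main_id, t, clicked):
    """Make the clicked side media the main one.

    Returns (new_main_id, new_time, side media to load for the new main).
    """
    if clicked not in active_annotations(annotations, main_id, t):
        raise ValueError("the clicked annotation is not active at time t")
    # Assumption: the side media has already played for (t - clicked.start) seconds.
    new_t = clicked.media_start + (t - clicked.start)
    return clicked.media_id, new_t, active_annotations(annotations, clicked.media_id, new_t)
```

Because annotations on the new main media are looked up with the same function, the swap naturally "loads" the new set of side media described above.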

After each swap, the user can go back to the previous step simply by clicking the Back button, just as in a web browser. This gives the application more flexibility and the user more freedom, because every click on a video is a step through the connected structure of the ontology.
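Browser-like Back navigation is naturally modeled as a stack of previous steps. A minimal sketch, assuming each step is a (media id, time in seconds) pair and that Back returns to the point where the previous main media was left; `PlayerHistory` and its methods are hypothetical names.

```python
class PlayerHistory:
    """Browser-like history of (main_media_id, time) steps."""

    def __init__(self, media_id: str, t: float = 0.0):
        self.current = (media_id, t)
        self._stack: list[tuple[str, float]] = []

    def swap(self, at_time: float, media_id: str, t: float) -> None:
        # Remember the media being left and the time at which it was left.
        self._stack.append((self.current[0], at_time))
        self.current = (media_id, t)

    def back(self) -> tuple[str, float]:
        # Like a browser, Back does nothing when there is no previous step.
        if self._stack:
            self.current = self._stack.pop()
        return self.current
```

Each swap pushes one step, so pressing Back repeatedly retraces the path through the annotation structure in reverse order.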

The annotated scenes contain information about the characters, the objects, the place where the scene is set, and a temporal reference. Every type of entity belongs to the ontological model, so any query about the scene description can be submitted on the model.

The system provides a collection of videos from the famous American television series Lost; the collected videos are semantically annotated in order to provide the user with rich information about each scene.

With these annotations, a user can perform queries such as: all the scenes where John and Jack appear together on the beach, or every scene that occurred before the second crash on the island, and so forth.
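Queries like these combine conditions on the annotated entities with the story's own timeline. The sketch below runs them over an in-memory list rather than on the RDF/OWL ontology used by the server; `Scene`, `story_order`, and the `EVENTS` table mapping an event such as the second crash to a position on the story timeline are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scene:
    video: str             # video the scene belongs to
    start: float           # start time of the scene in that video (s)
    characters: frozenset  # annotated characters
    place: str             # annotated place
    story_order: int       # position on the story timeline, not broadcast order


# Hypothetical positions of annotated events on the story timeline.
EVENTS = {"second crash": 6}


def scenes_with(scenes, characters, place=None):
    """Scenes where all `characters` appear (and, optionally, set at `place`)."""
    wanted = set(characters)
    return [s for s in scenes
            if wanted <= s.characters and (place is None or s.place == place)]


def scenes_before(scenes, event):
    """Scenes that occur before `event` on the story timeline."""
    return [s for s in scenes if s.story_order < EVENTS[event]]
```

Keeping a story-order value separate from the broadcast position matters for a series like Lost, where flashbacks make the two orders differ.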

On the server side, an ontology built in RDF and OWL models the semantic relationships between media and annotations according to their timelines. This ontology was defined and developed so that annotations and resources refer to the same concept of media, thus giving a temporal and semantic connection to multiple scenes belonging to many videos.

Other applications

With MyStoryPlayer, this ontological model can be adapted to many purposes, especially education and videoconferencing. In education, for example, a video of the speaker can be shown alongside a slideshow that follows the speech in sync. In videoconferencing, many streams can be recorded and presented in synchronized and dynamic modes. In the medical environment, it is possible to explain technical aspects of surgery or how new medical equipment works.

Search for annotation

To submit these types of query, the user selects values in the initial search mask of www.mystoryplayer.org from the categories used to annotate the scenes. The categories are: Places, Characters, Objects, and SceneDates/events. For Characters and Objects, a query can require one or more elements to appear, because a scene can contain several of them; further elements are added by clicking the '+' button next to the corresponding field of the search mask. Apart from the category values, there are two more default values the user can choose: undefined and any. The first means that no value is required for that category; the second means that some value for that category must appear in the annotations. In the sceneDate section, the user can make a temporal query on the ontology by selecting scenes before, at, or after a specific annotated event. In the last section of the mask, the user can select which media the matching annotations must refer to.

Once the mask is filled in, the user clicks the searchAnnotation button, and all the results of the query appear in a table just below the mask, grouped by the video they belong to. If the user clicks on a video result, the player starts from the very beginning of the video; if an annotation result is clicked, the player starts from the chosen annotation. During playback, the user can click on any other result of the previous query or submit a new query to the database.
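The mask semantics (lists of required values, the undefined and any defaults, and the before/at/after date relation) can be sketched as a filter that also groups the results by video. This is an illustrative sketch over plain dictionaries, not the server query; the annotation field names (`video`, `order`, and the category keys) and the function names are assumptions.

```python
from collections import defaultdict

UNDEFINED = "undefined"  # no value required for the category
ANY = "any"              # some value for the category must be annotated
CATEGORIES = ("Places", "Characters", "Objects")


def category_ok(required, values):
    # `required` is UNDEFINED, ANY, or a list of values that must all appear
    # (several Characters/Objects can be added with the '+' button).
    if required == UNDEFINED:
        return True
    if required == ANY:
        return bool(values)
    return set(required) <= set(values)


def date_ok(scene_date, order):
    # `scene_date` is UNDEFINED or (relation, event_order) on the story timeline.
    if scene_date == UNDEFINED:
        return True
    relation, event_order = scene_date
    if relation == "before":
        return order < event_order
    if relation == "at":
        return order == event_order
    if relation == "after":
        return order > event_order
    raise ValueError(f"unknown relation: {relation}")


def search_annotations(annotations, mask):
    """Return matching annotations grouped by the video they belong to."""
    results = defaultdict(list)
    for ann in annotations:
        entities_ok = all(category_ok(mask.get(c, UNDEFINED), ann.get(c, []))
                          for c in CATEGORIES)
        if entities_ok and date_ok(mask.get("SceneDate", UNDEFINED), ann["order"]):
            results[ann["video"]].append(ann)
    return dict(results)
```

Categories missing from the mask default to undefined, so an empty mask matches every annotation.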

Record and save an experience

This feature lets the user record every step of his personal experience on MyStoryPlayer, so that it can be shared and made available for further searches. Recording can be started at any time from the player by clicking the red button. From then on, the player records every step the user makes, such as swap, seek on the timeline, or back. Clicks on the results of the previous query, or on the results of any other query performed during the session, are also recorded, for both video results and annotation results. When the user decides to end his experience, he clicks the stopRec button and can save it by filling in a pop-up dialog box with the name/title of the experience and a short description. Before saving, he can replay the experience on the player. If he wants neither to save nor to replay it, clicking cancel brings him back to the player session. Experiences are saved as RDF on the server, where they remain available for further searches from the front end.
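The record/stop/save/cancel cycle can be sketched as a small state holder. In this sketch the action identifiers, the `ExperienceRecorder` class, and the dictionary it produces are hypothetical; the conversion of a saved experience to RDF on the server is not shown.

```python
from dataclasses import dataclass, field
from datetime import date

# Actions the text says are recorded; the identifiers are hypothetical.
ACTIONS = {"swap", "seek", "back", "video_result", "annotation_result"}


@dataclass
class ExperienceRecorder:
    steps: list = field(default_factory=list)
    recording: bool = False

    def start(self):
        """Red button: begin a new recording."""
        self.steps = []
        self.recording = True

    def record(self, action, **params):
        """Store one user action; ignored when not recording."""
        if not self.recording:
            return
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        self.steps.append({"action": action, **params})

    def stop(self):
        """stopRec button: end recording and return the steps for an optional replay."""
        self.recording = False
        return list(self.steps)

    def save(self, title, description):
        """Package the experience with its title and description (before RDF conversion)."""
        if self.recording:
            raise RuntimeError("stop the recording before saving")
        return {"title": title, "description": description,
                "created": date.today().isoformat(), "steps": list(self.steps)}

    def cancel(self):
        """Cancel: discard the recording and return to the normal player session."""
        self.recording = False
        self.steps = []
```

Actions performed before the red button is pressed, or after stopRec, are simply not stored.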

Search for experience (Provisional)

To search for previously recorded experiences on the server, a box allows a full-text search on the experience titles. Each result shows the complete title of the experience, its description, and its creation date. When the user clicks on a result, the player starts the experience and automatically repeats every step previously recorded. During replay, the user can exit the session, perform normal actions (such as swap, back, seek, or other queries on experiences or annotations), and record another experience.
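Searching and replaying can be sketched as follows, assuming experiences are stored as dictionaries with `title`, `description`, `created`, and `steps` keys (a hypothetical format) and that "full-text" means every query word must appear in the title, ignoring case. The `apply_step` callback stands in for the player and may return `False` to model the user leaving the replay.

```python
def search_experiences(experiences, text):
    """Return (title, description, created) for titles containing every query word."""
    words = text.lower().split()
    return [(e["title"], e["description"], e["created"])
            for e in experiences
            if all(w in e["title"].lower() for w in words)]


def replay(experience, apply_step):
    """Re-execute the recorded steps in order; return how many were executed.

    `apply_step` performs one step on the player; returning False means the
    user exited the replay session, so the remaining steps are skipped.
    """
    executed = 0
    for step in experience["steps"]:
        if apply_step(step) is False:
            break
        executed += 1
    return executed
```

A real front end would run the search on the server and drive the actual player, but the control flow of the replay is the same.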